New algorithm encourages robots to move more randomly to collect more diverse data for learning. In tests, robots started with no knowledge and then learned and correctly performed tasks within a single attempt. New model could improve safety and practicality of self-driving cars, delivery drones and more.

Research Focus: Week of April 29, 2024

Welcome to Research Focus, a series of blog posts that highlights notable publications, events, code/datasets, new hires and other milestones from across the research community at Microsoft.

NEW RESEARCH

Can Large Language Models Transform Natural Language Intent into Formal Method Postconditions?

Informal natural language that describes code functionality, such as code comments or function documentation, may contain substantial information about a program’s intent. However, there is no guarantee that a program’s implementation aligns with its natural language documentation. In the case of a conflict, leveraging information in code-adjacent natural language has the potential to enhance fault localization, debugging, and code trustworthiness. However, this information is often underutilized, due to the inherent ambiguity of natural language which makes natural language intent challenging to check programmatically. The “emergent abilities” of large language models (LLMs) have the potential to facilitate the translation of natural language intent to programmatically checkable assertions. However, due to a lack of benchmarks and evaluation metrics, it is unclear if LLMs can correctly translate informal natural language specifications into formal specifications that match programmer intent—and whether such translation could be useful in practice.

In a new paper: Can Large Language Models Transform Natural Language Intent into Formal Method Postconditions?, researchers from Microsoft describe nl2postcond, the problem of leveraging LLMs to transform informal natural language into formal method postconditions, expressed as program assertions. The paper, to be presented at the upcoming ACM International Conference on the Foundations of Software Engineering, introduces and validates metrics to measure and compare different nl2postcond approaches, using the correctness and discriminative power of generated postconditions. The researchers show that nl2postcond via LLMs has the potential to be helpful in practice by demonstrating that LLM-generated specifications can be used to discover historical bugs in real-world projects.
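To make the idea concrete, the sketch below shows what a postcondition expressed as a program assertion might look like for a small documented function. The function, the buggy variant, and both postconditions are hypothetical examples, not taken from the paper or its benchmarks. They only illustrate the difference between a postcondition that is merely correct and one that can also discriminate against a buggy implementation.

```python
def dedupe_sorted(values):
    """Return the distinct elements of `values` in ascending order."""
    return sorted(set(values))


def buggy_dedupe_sorted(values):
    """Hypothetical buggy variant: sorts but forgets to drop duplicates."""
    return sorted(values)


def weak_postcondition(values, result):
    # Correct for the documented intent, but weak: it only checks that
    # every returned element came from the input.
    return all(x in values for x in result)


def strong_postcondition(values, result):
    # Also requires strictly ascending order (so no duplicates) and that
    # no input element is missing from the result.
    strictly_ascending = all(a < b for a, b in zip(result, result[1:]))
    return strictly_ascending and set(result) == set(values)


sample = [3, 1, 3, 2]
print(weak_postcondition(sample, buggy_dedupe_sorted(sample)))    # True
print(strong_postcondition(sample, buggy_dedupe_sorted(sample)))  # False
print(strong_postcondition(sample, dedupe_sorted(sample)))        # True
```

Here the weak postcondition accepts the buggy variant, while the strong one rejects it and still accepts the correct implementation.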

NEW RESEARCH

Semantically Aligned Question and Code Generation for Automated Insight Generation

People who work with data, like engineers, analysts, and data scientists, often must manually look through data to find valuable insights or write complex scripts to automate exploration of the data. Automated insight generation provides these workers the opportunity to immediately glean insights about their data and identify valuable starting places for writing their exploration scripts. Unfortunately, when LLMs are used for automated insight generation, they can sometimes produce code that does not correctly correspond (or align) to the insight. In a recent paper: Semantically Aligned Question and Code Generation for Automated Insight Generation, researchers from Microsoft leverage the semantic knowledge of LLMs to generate targeted and insightful questions about data and the corresponding code to answer those questions. Through an empirical study on data from Open-WikiTable, they then show that embeddings can be effectively used for filtering out semantically unaligned pairs of question and code. The research also shows that generating questions and code together yields more interesting and diverse insights about data.

NEW RESEARCH

Explaining CLIP’s performance disparities on data from blind/low vision users

AI-based applications hold the potential to assist people who are blind or low vision (BLV) with everyday visual tasks. However, human assistance is often required, due to the wide variety of assistance needed and varying quality of images available. Recent advances in large multi-modal models (LMMs) could potentially address these challenges, enabling a new era of automated visual assistance. Yet, little work has been done to evaluate how well LMMs perform on data from BLV users.

In a recent paper: Explaining CLIP’s performance disparities on data from blind/low vision users, researchers from Microsoft and the World Bank address this issue by assessing CLIP, a widely used LMM with potential to underpin many assistive technologies. Testing 25 CLIP variants in a zero-shot classification task, their results show that disability objects, like guide canes and Braille displays, are recognized significantly less accurately than common objects, like TV remote controls and coffee mugs, in some cases by up to 28 percentage points.

The researchers analyze the captions in three large-scale datasets that are commonly used to train models like CLIP and show that BLV-related content (such as guide canes) is rarely mentioned. This is a potential reason for the large performance gaps. The researchers show that a few-shot learning approach with as few as five example images of a disability object can improve CLIP’s ability to recognize that object, holding the potential to mitigate CLIP’s performance disparities for BLV users. They then discuss other possible mitigations.

NEW RESEARCH

Closed-Form Bounds for DP-SGD against Record-level Inference

Privacy of training data is a central consideration when deploying machine learning (ML) models. Models trained with guarantees of differential privacy (DP) provably resist a wide range of attacks. Although it is possible to derive bounds, or safe limits, for specific privacy threats solely from DP guarantees, meaningful bounds require impractically small privacy budgets, which results in a large loss in utility.

In a recent paper: Closed-Form Bounds for DP-SGD against Record-level Inference, researchers from Microsoft present a new approach to quantify the privacy of ML models against membership inference (inferring whether a data record is in the training data) and attribute inference (reconstructing partial information about a record) without the indirection through DP. They focus on the popular DP-SGD algorithm, which they model as an information theoretic channel whose inputs are the secrets that an attacker wants to infer (e.g., membership of a data record) and whose outputs are the intermediate model parameters produced by iterative optimization. They obtain closed-form bounds for membership inference that match state-of-the-art techniques but are orders of magnitude faster to compute. They also present the first algorithm to produce data-dependent bounds against attribute inference. Compared to bounds computed indirectly through numerical DP budget accountants, these bounds provide a tighter characterization of the privacy risk of deploying an ML model trained on a specific dataset. This research provides a direct, interpretable, and practical way to evaluate the privacy of trained models against inference threats without sacrificing utility.

Microsoft Research in the news

TIME100 Most Influential People in Health

TIME | May 2, 2024

Microsoft Research president Peter Lee is included as an innovator on the 2024 TIME100 Health list, TIME’s inaugural list of 100 individuals who most influenced global health this year.

Sanctuary AI Announces Microsoft Collaboration to Accelerate AI Development for General Purpose Robots

Sanctuary AI | May 1, 2024

Sanctuary AI and Microsoft are collaborating on the development of AI models for general purpose humanoid robots. Sanctuary AI will leverage Microsoft’s Azure cloud resources for their AI workloads.

Tiny but mighty: The Phi-3 small language models with big potential

Microsoft Source | April 23, 2024

LLMs create exciting opportunities for AI to boost productivity and creativity. But they require significant computing resources. Phi-3 models, which perform better than models twice their size, are now publicly available from Microsoft.

AI Is Unearthing New Drug Candidates, But It Still Needs Human Oversight

Drug Discovery Online | April 11, 2024

Drug Discovery Online published a contributed article from Junaid Bajwa discussing how recent advancements in AI offer the potential to streamline and optimize drug development in unprecedented ways.

How AI is helping create sustainable farms of the future

The Grocer | April 16, 2024

Ranveer Chandra authored an essay on how AI is helping create sustainable farms of the future for UK-based trade outlet, The Grocer.

The Future of AI and Mental Health

Psychiatry Online | April 16, 2024

Psychiatric News published an article featuring Q&A with Jina Suh, highlighting the important considerations for the use of AI technologies among psychiatrists and mental health professionals.

MatterGen’s Breakthroughs: How AI Shapes the Future of Materials Science

Turing Post | April 19, 2024

Turing Post covered MatterGen in an interview with Tian Xie. Learn more about this impactful generative model for inorganic materials design.

Machine Learning Street Talk interview with Chris Bishop

Machine Learning Street Talk | April 10, 2024

Chris Bishop joined Dr. Tim Scarfe for a wide-ranging interview on advances in deep learning and AI for science.

The post Research Focus: Week of April 29, 2024 appeared first on Microsoft Research.

Revolutionize Customer Satisfaction with tailored reward models for your business on Amazon SageMaker

As more powerful large language models (LLMs) are used to perform a variety of tasks with greater accuracy, the number of applications and services that are being built with generative artificial intelligence (AI) is also growing. With great power comes responsibility, and organizations want to make sure that these LLMs produce responses that align with their organizational values and provide the same unique experience they always intended for their end-customers.

Evaluating AI-generated responses presents challenges. This post discusses techniques to align them with company values and build a custom reward model using Amazon SageMaker. By doing so, you can provide customized customer experiences that uniquely reflect your organization’s brand identity and ethos.

Challenges with out-of-the-box LLMs

Out-of-the-box LLMs provide high accuracy, but often lack customization for an organization’s specific needs and end-users. Human feedback varies in subjectivity across organizations and customer segments. Collecting diverse, subjective human feedback to refine LLMs is time-consuming and unscalable.

This post showcases a reward modeling technique to efficiently customize LLMs for an organization by programmatically defining reward functions that capture preferences for model behavior. We demonstrate an approach to deliver LLM results tailored to an organization without intensive, continual human judgement. The techniques aim to overcome customization and scalability challenges by encoding an organization’s subjective quality standards into a reward model that guides the LLM to generate preferable outputs.

Objective vs. subjective human feedback

Not all human feedback is the same. We can categorize human feedback into two types: objective and subjective.

Any human being who is asked to judge the color of the following boxes would confirm that the left one is a white box and the right one is a black box. This is objective: the answer does not vary from person to person.

Determining whether an AI model’s output is “great” is inherently subjective. Consider the following color spectrum. If asked to describe the colors on the ends, people would provide varied, subjective responses based on their perceptions. One person’s white may be another’s gray.

This subjectivity poses a challenge for improving AI through human feedback. Unlike objective right/wrong feedback, subjective preferences are nuanced and personalized. The same output could elicit praise from one person and criticism from another. The key is acknowledging and accounting for the fundamental subjectivity of human preferences in AI training. Rather than seeking elusive objective truths, we must provide models exposure to the colorful diversity of human subjective judgment.

Unlike traditional model tasks such as classification, which can be neatly benchmarked on test datasets, assessing the quality of a sprawling conversational agent is highly subjective. One human’s riveting prose is another’s aimless drivel. So how should we refine these expansive language models when humans intrinsically disagree on the hallmarks of a “good” response?

The key is gathering feedback from a diverse crowd. With enough subjective viewpoints, patterns emerge on engaging discourse, logical coherence, and harmless content. Models can then be tuned based on broader human preferences. There is a general perception that reward models are often associated only with Reinforcement Learning from Human Feedback (RLHF). Reward modeling, in fact, goes beyond RLHF, and can be a powerful tool for aligning AI-generated responses with an organization’s specific values and brand identity.

Reward modeling

You can choose an LLM and have it generate numerous responses to diverse prompts, and then your human labelers will rank those responses. It’s important to have diversity in human labelers. Clear labeling guidelines are critical. Without explicit criteria, judgments can become arbitrary. Useful dimensions include coherence, relevance, creativity, factual correctness, logical consistency, and more. Human labelers put these responses into categories and label them favorite to least favorite, as shown in the following example. This example showcases how different humans perceive these possible responses from the LLM in terms of their most favorite (labeled as 1 in this case) and least favorite (labeled as 3 in this case). Each column is labeled 1, 2, or 3 from each human to signify their most preferred and least preferred response from the LLM.

By compiling these subjective ratings, patterns emerge on what resonates across readers. The aggregated human feedback essentially trains a separate reward model on writing qualities that appeal to people. This technique of distilling crowd perspectives into an AI reward function is called reward modeling. It provides a method to improve LLM output quality based on diverse subjective viewpoints.
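As a rough illustration of how such rankings could be compiled into training signal, the sketch below averages each response’s rank across labelers and emits (chosen, rejected) pairs. Averaging ranks is only one simple aggregation choice and is an assumption here, as are the function name, the record keys, and the sample data.

```python
from itertools import combinations
from statistics import mean


def rankings_to_pairs(responses, rankings):
    """Turn per-labeler rankings into (chosen, rejected) preference pairs.

    responses: list of response strings.
    rankings:  one list per labeler; rankings[k][i] is the rank labeler k
               gave to responses[i] (1 = most preferred).
    """
    # Average rank across labelers; a lower average means more preferred.
    avg_rank = [mean(labeler[i] for labeler in rankings)
                for i in range(len(responses))]
    pairs = []
    for i, j in combinations(range(len(responses)), 2):
        if avg_rank[i] == avg_rank[j]:
            continue  # no clear preference, so skip the tie
        better, worse = (i, j) if avg_rank[i] < avg_rank[j] else (j, i)
        pairs.append({"chosen": responses[better],
                      "rejected": responses[worse]})
    return pairs


responses = ["Response A", "Response B", "Response C"]
rankings = [
    [1, 2, 3],  # labeler 1
    [2, 1, 3],  # labeler 2
    [1, 3, 2],  # labeler 3
]
pairs = rankings_to_pairs(responses, rankings)
for pair in pairs:
    print(pair)
```

With this sample data, Response A has the lowest average rank, so it appears as the chosen side in both pairs that involve it.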

Solution overview

In this post, we detail how to train a reward model based on organization-specific human labeling feedback collected for various prompts tested on the base FM. The following diagram illustrates the solution architecture.

For more details, see the accompanying notebook.

Prerequisites

To successfully train a reward model, you need the following:

A large dataset with prompts and ranked responses from human labelers that reflects your organizational and end-user needs. For this post, we store the dataset in an Amazon Simple Storage Service (Amazon S3) bucket.

A small language model with a numerical head, such as OPT-2.7b or Falcon 7b (a decoder-only model of approximately 6 GB is good enough).

A mechanism to run distributed training. For this post, we use SageMaker.

An AWS Identity and Access Management (IAM) role associated with the Amazon SageMaker Studio user profile that has access to the S3 bucket holding the curated dataset. The standard SageMaker IAM role will suffice for this post. Refer to Amazon SageMaker Identity-Based Policy Examples for guidance on best practices and examples of identity-based policies for SageMaker.

A SageMaker domain. You can quickly spin up a SageMaker domain and set up a single user for launching the SageMaker Studio notebook environment you’ll need to complete the model training. For instructions on setting up your environment, see Quick onboard to Amazon SageMaker domain.

Launch SageMaker Studio

Complete the following steps to launch SageMaker Studio:

On the SageMaker console, choose Studio in the navigation pane.

On the Studio landing page, select the domain and user profile for launching Studio.

Choose Open Studio.

To launch SageMaker Studio, choose Launch personal Studio.

Let’s see how to create a reward model locally in a SageMaker Studio notebook environment by using a pre-existing model from the Hugging Face model hub.

Prepare a human-labeled dataset and train a reward model

When doing reward modeling, getting feedback data from humans can be expensive. This is because reward modeling needs feedback from other human workers instead of only using data collected during regular system use. How well your reward model behaves depends on the quality and amount of feedback from humans.

We recommend using AWS-managed offerings such as Amazon SageMaker Ground Truth. It offers the most comprehensive set of human-in-the-loop capabilities, allowing you to harness the power of human feedback across the machine learning (ML) lifecycle to improve the accuracy and relevancy of models. You can complete a variety of human-in-the-loop tasks with SageMaker Ground Truth, from data generation and annotation to model review, customization, and evaluation, either through a self-service or AWS-managed offering.

For this post, we use the IMDB dataset to train a reward model that provides a higher score for text that humans have labeled as positive, and a lower score for negative text.

We prepare the dataset with the following code:

The following example shows a sample record from the prepared dataset, which includes references to rejected and chosen responses. The record also includes the input IDs and attention masks for the chosen and rejected responses.
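The accompanying notebook contains the actual preparation code. As a minimal sketch of the idea, assuming sentiment labels of 1 for positive and 0 for negative, each positive review can be paired with a negative one to form a chosen/rejected record. The field names, the toy tokenizer, and the sample reviews below are illustrative assumptions; a real pipeline would use the tokenizer that matches the base model.

```python
import random


def toy_tokenize(text, max_length=8):
    """Stand-in for a real tokenizer: one small integer ID per word, zero-padded."""
    ids = [sum(map(ord, word)) % 1000 + 1
           for word in text.lower().split()][:max_length]
    mask = [1] * len(ids)
    padding = max_length - len(ids)
    return {"input_ids": ids + [0] * padding,
            "attention_mask": mask + [0] * padding}


def build_preference_records(examples, seed=0):
    """Pair each positive review (chosen) with a negative one (rejected)."""
    positives = [e["text"] for e in examples if e["label"] == 1]
    negatives = [e["text"] for e in examples if e["label"] == 0]
    random.Random(seed).shuffle(negatives)
    records = []
    for chosen, rejected in zip(positives, negatives):
        tok_c, tok_r = toy_tokenize(chosen), toy_tokenize(rejected)
        records.append({
            "chosen": chosen,
            "rejected": rejected,
            "input_ids_chosen": tok_c["input_ids"],
            "attention_mask_chosen": tok_c["attention_mask"],
            "input_ids_rejected": tok_r["input_ids"],
            "attention_mask_rejected": tok_r["attention_mask"],
        })
    return records


examples = [
    {"text": "A moving and beautifully acted film", "label": 1},
    {"text": "Dull plot and wooden dialogue", "label": 0},
    {"text": "Great fun from start to finish", "label": 1},
    {"text": "I want those two hours back", "label": 0},
]
records = build_preference_records(examples)
print(records[0]["chosen"], "|", records[0]["rejected"])
```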

Load the pre-trained model

In this case, we use the OPT-1.3b (Open Pre-trained Transformer Language Model) model in Amazon SageMaker JumpStart from Hugging Face. If you want to do all of the training locally on your notebook instead of distributed training, you need to use an instance with enough accelerator memory. We run the following training on a notebook running on ml.g4dn.xlarge instance type:

Define the custom trainer function

In the following code snippet, we create a custom trainer that calculates how well a model is performing on a task:

It compares the model’s results for two sets of input data: one set that was chosen and another set that was rejected. The trainer then uses these results to figure out how good the model is at distinguishing between the chosen and rejected data. This helps the trainer adjust the model to improve its performance on the task. The CustomTrainer class is used to create a specialized trainer that calculates the loss function for a specific task involving chosen and rejected input sequences. This custom trainer extends the functionality of the standard Trainer class provided by the transformers library, allowing for a tailored approach to handling model outputs and loss computation based on the specific requirements of the task. See the following code:
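The exact loss used by the custom trainer is in the accompanying notebook. A common choice for this kind of chosen-versus-rejected comparison is a pairwise ranking loss, the mean of -log(sigmoid(score_chosen - score_rejected)), and the sketch below assumes that form. It is written in plain Python on scalar scores to keep it self-contained; the function name is hypothetical, and a real trainer would compute the same quantity on tensors of model outputs.

```python
import math


def pairwise_ranking_loss(chosen_scores, rejected_scores):
    """Mean of -log(sigmoid(chosen - rejected)) over a batch of score pairs."""
    losses = []
    for chosen, rejected in zip(chosen_scores, rejected_scores):
        margin = chosen - rejected
        # -log(sigmoid(m)) equals log(1 + exp(-m)); branch on the sign of m
        # so that exp() is never called on a large positive number.
        if margin >= 0:
            losses.append(math.log1p(math.exp(-margin)))
        else:
            losses.append(-margin + math.log1p(math.exp(margin)))
    return sum(losses) / len(losses)


# Small loss: the chosen response already scores higher than the rejected one.
print(pairwise_ranking_loss([2.0], [-1.0]))
# Large loss: the ranking is inverted, so training would push the scores apart.
print(pairwise_ranking_loss([-1.0], [2.0]))
```

Minimizing this quantity pushes the score of the chosen response above the score of the rejected one, which is the behavior described above.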

The TrainingArguments in the provided code snippet are used to configure various aspects of the training process for an ML model. Let’s break down the purpose of each parameter, and how they can influence the training outcome:

output_dir – Specifies the directory where the trained model and associated files will be saved. This parameter helps organize and store the trained model for future use.

overwrite_output_dir – Determines whether to overwrite the output directory if it already exists. Setting this to True allows for reusing the same directory without manual deletion.

do_train – Indicates whether to perform training. If set to True, the model will be trained using the provided training dataset.

do_eval and do_predict – Control whether to perform evaluation and prediction tasks, respectively. In this case, both are set to False, meaning only training will be conducted.

evaluation_strategy – Defines when evaluation should be performed during training. Setting it to “no” means evaluation will not be done during training.

learning_rate – Specifies the learning rate for the optimizer, influencing how quickly or slowly the model learns from the data.

num_train_epochs – Sets the number of times the model will go through the entire training dataset during training. One epoch means one complete pass through all training samples.

per_device_train_batch_size – Determines how many samples are processed in each batch during training on each device (for example, GPU). A smaller batch size can lead to slower but more stable training.

gradient_accumulation_steps – Sets the number of batches whose gradients are accumulated before each update of the model’s parameters. This yields a larger effective batch size without increasing the per-device memory footprint.

remove_unused_columns – Specifies whether unused columns in the dataset should be removed before processing, optimizing memory usage.

By configuring these parameters in the TrainingArguments, you can influence various aspects of the training process, such as model performance, convergence speed, memory usage, and overall training outcome based on your specific requirements and constraints.
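To show how these parameters fit together, the sketch below collects them in a plain dictionary and computes the effective batch size that results from combining the per-device batch size with gradient accumulation. Only the parameter names come from the list above; every value is a hypothetical placeholder, not the setting used in the notebook.

```python
# Hypothetical values; only the parameter names come from the list above.
training_config = {
    "output_dir": "reward_model_output",  # assumed local path
    "overwrite_output_dir": True,
    "do_train": True,
    "do_eval": False,
    "do_predict": False,
    "evaluation_strategy": "no",
    "learning_rate": 5e-6,
    "num_train_epochs": 1,
    "per_device_train_batch_size": 2,
    "gradient_accumulation_steps": 32,
    # False keeps custom dataset columns (such as chosen/rejected fields)
    # that the model's forward method does not accept directly.
    "remove_unused_columns": False,
}


def effective_batch_size(config, num_devices=1):
    """Number of samples that contribute to each optimizer step."""
    return (config["per_device_train_batch_size"]
            * config["gradient_accumulation_steps"]
            * num_devices)


print(effective_batch_size(training_config))  # 2 * 32 * 1 = 64
```

With the transformers library installed, a dictionary like this could be unpacked into TrainingArguments(**training_config). Argument names can vary between library versions, so check them against the version you have installed.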

When you run this code, it trains the reward model based on the numerical representation of subjective feedback you gathered from the human labelers. A trained reward model will give a higher score to LLM responses that humans are more likely to prefer.

Use the reward model to evaluate the base LLM

You can now feed the response from your LLM to this reward model. The numerical score it produces indicates how well the response aligns with the subjective organizational preferences embedded in the reward model. The following diagram illustrates this process. You can compare this score against a threshold to decide whether the response from the LLM can be shared with the end-user.

For example, let’s say we created a reward model to avoid toxic, harmful, or inappropriate content. When a chatbot powered by an LLM produces a response, the reward model scores it. Responses with scores above a predetermined threshold are deemed acceptable to share with users. Scores below the threshold mean the content should be blocked. This lets us automatically filter chatbot content that doesn’t meet the standards we want to enforce. To explore more, see the accompanying notebook.
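The thresholding step can be sketched as a small gate in front of the chatbot’s output. In this illustration, toy_score is a hypothetical keyword-based stand-in for a trained reward model, and the threshold of 0.0 is an arbitrary assumption. Treating a score exactly equal to the threshold as acceptable is also a choice made here, not something the post specifies.

```python
def gate_response(response, score_fn, threshold):
    """Return the response if its reward score meets the threshold, else None."""
    score = score_fn(response)
    return response if score >= threshold else None


def toy_score(text):
    """Hypothetical stand-in for a trained reward model's scalar output."""
    blocked_words = {"idiot", "stupid"}
    return -1.0 if blocked_words & set(text.lower().split()) else 1.0


print(gate_response("Happy to help with your order.", toy_score, threshold=0.0))
print(gate_response("That is a stupid question.", toy_score, threshold=0.0))
```

The first call returns the response unchanged and the second returns None, which the calling application could replace with a fallback message.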

Clean up

To avoid incurring future charges, delete all the resources that you created. Delete the deployed SageMaker models, if any, and stop the SageMaker Studio notebook you launched for this exercise.

Conclusion

In this post, we showed how to train a reward model that predicts a human preference score from the LLM’s response. This is done by generating several outputs for each prompt with the LLM, then asking human annotators to rank or score the responses to each prompt, and training the reward model on those judgments. After the reward model is trained, you can use it to evaluate the LLM’s responses against your subjective organizational standards.

As an organization evolves, the reward functions must evolve alongside changing organizational values and user expectations. What defines a “great” AI output is subjective and changes over time. Organizations need flexible ML pipelines that continually retrain reward models with updated rewards reflecting the latest priorities and needs. This space is continuously evolving: direct preference-based policy optimization, tool-augmented reward modeling, and example-based control are other popular techniques to align AI systems with human values and goals.

We invite you to take the next step in customizing your AI solutions by engaging with the diverse and subjective perspectives of human feedback. Embrace the power of reward modeling to ensure your AI systems resonate with your brand identity and deliver the exceptional experiences your customers deserve. Start refining your AI models today with Amazon SageMaker and join the vanguard of businesses setting new standards in personalized customer interactions. If you have any questions or feedback, please leave them in the comments section.

About the Author

Dinesh Kumar Subramani is a Senior Solutions Architect based in Edinburgh, Scotland. He specializes in artificial intelligence and machine learning, and is a member of the technical field community within Amazon. Dinesh works closely with UK Central Government customers to solve their problems using AWS services. Outside of work, Dinesh enjoys spending quality time with his family, playing chess, and exploring a diverse range of music.

Amazon Personalize launches new recipes supporting larger item catalogs with lower latency

Personalized customer experiences are essential for engaging today’s users. However, delivering truly personalized experiences that adapt to changes in user behavior can be both challenging and time-consuming. Amazon Personalize makes it straightforward to personalize your website, app, emails, and more, using the same machine learning (ML) technology used by Amazon, without requiring ML expertise. With the recipes (algorithms for specific use cases) provided by Amazon Personalize, you can deliver a wide array of personalization, including product or content recommendations and personalized ranking.

Today, we are excited to announce the general availability of two advanced recipes in Amazon Personalize, User-Personalization-v2 and Personalized-Ranking-v2 (v2 recipes), which are built on the cutting-edge Transformers architecture to support larger item catalogs with lower latency.

In this post, we summarize the new enhancements, and guide you through the process of training a model and providing recommendations for your users.

Benefits of new recipes

The new recipes offer enhancements in scalability, latency, model performance, and functionality.

Enhanced scalability – The new recipes now support training with catalogs of up to 5 million items and up to 3 billion interactions, empowering personalization for large catalogs and platforms with billions of usage events.

Lower latency – The new recipes offer lower inference latency and faster training on large datasets, which can reduce the delay for your end-users.

Performance optimization – Amazon Personalize testing showed that v2 recipes improved recommendation accuracy by up to 9% and recommendation coverage by up to 1.8x compared to previous versions. A higher coverage means Amazon Personalize recommends more of your catalog.

Return item metadata in inference responses – The new recipes enable item metadata by default without extra charge, allowing you to return metadata such as genres, descriptions, and availability in inference responses. This can help you enrich recommendations in your user interfaces without extra work. If you use Amazon Personalize with generative AI, you can also feed the metadata into prompts. Providing more context to large language models can help them gain a deeper understanding of product attributes to generate more relevant content.

Highly automated operations – Our new recipes are designed to reduce your overhead for training and tuning the model. For example, Amazon Personalize simplifies training configuration and automatically selects the optimal settings for your custom models behind the scenes.

Solution overview

To use the User-Personalization-v2 and Personalized-Ranking-v2 recipes, you first need to set up Amazon Personalize resources. Create your dataset group, import your data, train a solution version, and deploy a campaign. For full instructions, see Getting started.

For this post, we follow the Amazon Personalize console approach to deploy a campaign. Alternatively, you can build the entire solution using the SDK approach. You can also get batch recommendations with an asynchronous batch flow. We use the MovieLens public dataset and User-Personalization-v2 recipe to show you the workflow.

Prepare the dataset

Complete the following steps to prepare your dataset:

Create a dataset group. Each dataset group can contain up to three datasets: users, items, and interactions, with the interactions dataset being mandatory for User-Personalization-v2 and Personalized-Ranking-v2.

Create an interactions dataset using a schema.

Import the interactions data to Amazon Personalize from Amazon Simple Storage Service (Amazon S3).
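For the schema step, Amazon Personalize expects an Avro-format JSON schema. The sketch below builds a minimal interactions schema with the USER_ID, ITEM_ID, and TIMESTAMP fields and serializes it to JSON. Treat it as a starting point under the assumption that no optional fields (such as an event type) are needed, and check the Developer Guide for the fields your recipe and data require.

```python
import json

# Minimal interactions schema; optional fields are omitted in this sketch.
interactions_schema = {
    "type": "record",
    "name": "Interactions",
    "namespace": "com.amazonaws.personalize.schema",
    "fields": [
        {"name": "USER_ID", "type": "string"},
        {"name": "ITEM_ID", "type": "string"},
        {"name": "TIMESTAMP", "type": "long"},  # Unix epoch time
    ],
    "version": "1.0",
}

# The console and the SDK both take the schema as a JSON string.
schema_json = json.dumps(interactions_schema, indent=2)
print(schema_json)
```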

Train a model

After the dataset import job is complete, you can analyze data before training. Amazon Personalize Data analysis shows you statistics about your data as well as actions you can take to meet training requirements and improve recommendations.

Now you’re ready to train your model.

On the Amazon Personalize console, choose Dataset groups in the navigation pane.

Choose your dataset group.

Choose Create solutions.

For Solution name, enter your solution name.

For Solution type, select Item recommendation.

For Recipe, choose the new aws-user-personalization-v2 recipe.

In the Training configuration section, for Automatic training, select Turn on to maintain the effectiveness of your model by retraining it on a regular cadence.

Under Hyperparameter configuration, select Apply recency bias. Recency bias determines whether the model should give more weight to the most recent item interactions data in your interactions dataset.

Choose Create solution.

If you turned on automatic training, Amazon Personalize will automatically create your first solution version. A solution version refers to a trained ML model. When a solution version is created for the solution, Amazon Personalize trains the model backing the solution version based on the recipe and training configuration. It can take up to 1 hour for the solution version creation to start.

Under Custom resources in the navigation pane, choose Campaigns.

Choose Create campaign.

A campaign deploys a solution version (trained model) to generate real-time recommendations. Campaigns created with solutions trained on v2 recipes are automatically opted-in to include item metadata in recommendation results. You can choose metadata columns during an inference call.

Provide your campaign details and create your campaign.

Get recommendations

After you create or update your campaign, you can get a recommended list of items that users are more likely to interact with, sorted from highest to lowest relevance score.

Select the campaign and View details.

In the Test campaign results section, enter the User ID and choose Get recommendations.

The following table shows a recommendation result for a user that includes the recommended items, relevance score, and item metadata (Title and Genre).
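The same request can be made programmatically. The sketch below wraps the GetRecommendations call of the Amazon Personalize runtime in a small helper that accepts any client object, so it can be exercised without AWS credentials. The helper name, the campaign ARN placeholder, and the metadata column names in the usage comment are assumptions for illustration.

```python
def fetch_recommendations(client, campaign_arn, user_id,
                          num_results=10, metadata_columns=None):
    """Call GetRecommendations and return (item_id, score, metadata) tuples."""
    request = {
        "campaignArn": campaign_arn,
        "userId": user_id,
        "numResults": num_results,
    }
    if metadata_columns:
        # Metadata columns are requested per dataset; "ITEMS" is the items dataset.
        request["metadataColumns"] = {"ITEMS": list(metadata_columns)}
    response = client.get_recommendations(**request)
    return [(item["itemId"], item.get("score"), item.get("metadata", {}))
            for item in response["itemList"]]


# With AWS credentials configured, the client would typically be created as:
#   import boto3
#   client = boto3.client("personalize-runtime")
#   fetch_recommendations(client, "<your-campaign-arn>", "123",
#                         metadata_columns=["TITLE", "GENRES"])
```

Passing the client in as a parameter keeps the helper easy to test with a stub object before pointing it at a live campaign.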

Your User-Personalization-v2 campaign is now ready to feed into your website or app and personalize the journey of each of your customers.

Clean up

Make sure you clean up any unused resources you created in your account while following the steps outlined in this post. You can delete campaigns, datasets, and dataset groups via the Amazon Personalize console or using the Python SDK.

Conclusion

The new Amazon Personalize User-Personalization-v2 and Personalized-Ranking-v2 recipes take personalization to the next level with support of larger item catalogs, reduced latency, and optimized performance. For more information about Amazon Personalize, see the Amazon Personalize Developer Guide.

About the Authors

Jingwen Hu is a Senior Technical Product Manager working with AWS AI/ML on the Amazon Personalize team. In her spare time, she enjoys traveling and exploring local food.

Daniel Foley is a Senior Product Manager for Amazon Personalize. He is focused on building applications that leverage artificial intelligence to solve our customers’ largest challenges. Outside of work, Dan is an avid skier and hiker.

Pranesh Anubhav is a Senior Software Engineer for Amazon Personalize. He is passionate about designing machine learning systems to serve customers at scale. Outside of his work, he loves playing soccer and is an avid follower of Real Madrid.

Tianmin Liu is a senior software engineer working for Amazon Personalize. He focuses on developing recommender systems at scale using various machine learning algorithms. In his spare time, he likes playing video games, watching sports, and playing the piano.

Abhishek Mangal is a software engineer working for Amazon Personalize. He works on developing recommender systems at scale using various machine learning algorithms. In his spare time, he likes to watch anime and believes One Piece is the greatest piece of storytelling in recent history.

Yifei Ma is a Senior Applied Scientist at AWS AI Labs working on recommender systems. His research interests lie in active learning, generative models, time series analysis, and online decision-making. Outside of work, he is an aviation enthusiast.

Hao Ding is a Senior Applied Scientist at AWS AI Labs and is working on advancing the recommender system for Amazon Personalize. His research interests lie in recommendation foundation models, Bayesian deep learning, large language models, and their applications in recommendation.

Rishabh Agrawal is a Senior Software Engineer working on AI services at AWS. In his spare time, he enjoys hiking, traveling and reading.

Get started with Amazon Titan Text Embeddings V2: A new state-of-the-art embeddings model on Amazon Bedrock

Embeddings are integral to various natural language processing (NLP) applications, and their quality is crucial for optimal performance. They are commonly used in knowledge bases to represent textual data as dense vectors, enabling efficient similarity search and retrieval. In Retrieval Augmented Generation (RAG), embeddings are used to retrieve relevant passages from a corpus to provide context for language models to generate informed, knowledge-grounded responses. Embeddings also play a key role in personalization and recommendation systems by representing user preferences, item characteristics, and historical interactions as vectors, allowing calculation of similarities for personalized recommendations based on user behavior and item embeddings. As new embedding models are released with incremental quality improvements, organizations must weigh the potential benefits against the associated costs of upgrading, considering factors like computational resources, data reprocessing, integration efforts, and projected performance gains impacting business metrics.

In September of 2023, we announced the launch of Amazon Titan Text Embeddings V1, a multilingual text embeddings model that converts text inputs like single words, phrases, or large documents into high-dimensional numerical vector representations. Since then, many of our customers have used the V1 model, which supports over 25 languages, accepts inputs of up to 8,192 tokens, and outputs vectors of 1,536 dimensions for high accuracy and low latency. The model was made available as a serverless offering via Amazon Bedrock, simplifying embedding generation and integration with downstream applications. We published a follow-up post on January 31, 2024, and provided code examples using AWS SDKs and LangChain, showcasing a Streamlit semantic search app.

Today, we are happy to announce Amazon Titan Text Embeddings V2, our second-generation embeddings model for Amazon Bedrock. The new model is optimized for the most common use cases we see with many of our active customers, including RAG, multi-language, and code embedding use cases. The following table summarizes the key differences compared to V1.

| Feature | Amazon Titan Text Embeddings V1 | Amazon Titan Text Embeddings V2 |
| --- | --- | --- |
| Output dimension support | 1536 | 256, 512, 1024 |
| Language support | 25+ | 100+ |
| Unit vector normalization support | No | Yes |
| Price per million tokens | $0.10 | $0.02 (equivalently, $0.00002 per 1,000 tokens) |

With these new features, we expect many more customers to choose Amazon Titan Text Embeddings V2 to build common generative artificial intelligence (AI) applications. In this post, we discuss the benefits of the V2 model, how to conduct your own evaluation of the model, and how to migrate to using the new model.

Let’s dig in!

Benefits of Amazon Titan Text Embeddings V2

Amazon Titan Text Embeddings V2 is the second-generation embedding model for Amazon Bedrock, optimized for some of the most common use cases we have seen with our customers. Some of the key features include:

Optimized for RAG solutions

Flexible embedding sizes

Improved multilingual and code support

Embeddings have become an integral part of various NLP applications, and their quality is crucial for achieving optimal performance.

The large language model (LLM) landscape is rapidly evolving, with leading providers offering increasingly powerful and versatile embedding models. Although incremental improvements in embedding quality may seem modest at the high level, the actual benefits can be significant for specific use cases. For example, in a recommendation system for a large ecommerce platform, a modest increase in recommendation accuracy could translate into significant additional revenue.

A common way to select an embedding model (or any model) is to look at public benchmarks; an accepted benchmark for measuring embedding quality is the MTEB leaderboard. The Massive Text Embedding Benchmark (MTEB) evaluates text embedding models across a wide range of tasks and datasets. MTEB encompasses 8 different embedding tasks, covering a total of 58 datasets and 112 languages. In this benchmark, 33 different text embedding models were evaluated on the MTEB tasks. A key finding from the benchmark was that no single text embedding method emerged as the clear leader across all tasks and datasets. Each model exhibited strengths and weaknesses depending on the specific embedding task and data characteristics. This highlights the need for continued research into developing more versatile and robust text embedding techniques that can perform well across diverse use cases and language domains.

Although this is a useful benchmark, we caution our enterprise customers with the following considerations:

Although the MTEB leaderboard is widely recognized, it provides only a partial assessment by focusing solely on accuracy metrics and overlooking crucial practical factors like inference latency and model capabilities. The leaderboard rankings combine and compare embedding models across different vector dimensions, making direct and fair model comparisons challenging.

Additionally, the leaders on this accuracy-centric leaderboard change frequently as new models are continually introduced, providing a shifting and incomplete perspective on practical model performance trade-offs that real-world applications must consider beyond just accuracy numbers.

Lastly, costs need to be weighed against the expected benefits and performance improvements in the specific use case. A small gain in accuracy may not justify the significant overhead and opportunity costs of transitioning embeddings models, especially in large-scale, business-critical applications. Enterprises should perform a rigorous cost-benefit analysis to make sure the projected performance uplift from an updated embeddings model provides sufficient return on investment (ROI) to offset the migration costs and operational disruption.

In summary, start with evaluating the benchmark scores, but don’t decide until you have done your own due diligence.

Benchmark results

The Amazon Titan Text Embeddings V2 model can output embeddings of various sizes. A smaller output size reduces your memory footprint, which translates directly into cost savings. The default size is 1,024 dimensions, compared to 1,536 for V1, a reduction of approximately 33% in vector size. This matters because the vector database is a major component of the cost of a RAG solution. In our internal testing, we found that using the 256-dimension output resulted in only about 3.24% accuracy loss while yielding a fourfold saving from the size reduction (relative to the 1,024 default). Running our evaluation on MTEB datasets, we found Amazon Titan Text Embeddings V2 to perform competitively, with scores like 57.5 on reranking tasks, for example. With the model trained on over 100 languages, it’s no surprise the model achieves scores like 55 on the MIRACL multilingual dataset and has an overall weighted average MTEB score of 60.37. Full MTEB scores are available on the MTEB leaderboard.
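The size arithmetic above can be checked with a short sketch. It assumes each vector component is stored as a 4-byte float and ignores index overhead, which varies by vector database; the function names are illustrative only.

```python
BYTES_PER_FLOAT32 = 4  # assumption: components stored as 32-bit floats


def index_size_bytes(
    num_vectors: int, dimensions: int, bytes_per_value: int = BYTES_PER_FLOAT32
) -> int:
    """Raw storage needed for the vectors alone, excluding any index overhead."""
    return num_vectors * dimensions * bytes_per_value


def size_reduction(old_dimensions: int, new_dimensions: int) -> float:
    """Fraction of raw vector storage saved by moving to a smaller output size."""
    return 1 - new_dimensions / old_dimensions


v1_to_v2_default = size_reduction(1536, 1024)   # about 0.33, the ~33% quoted above
default_to_smallest = 1024 / 256                # 4.0, the fourfold saving
million_vectors_at_1024 = index_size_bytes(1_000_000, 1024)  # 4,096,000,000 bytes
```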

However, we strongly encourage you to run your own benchmarks with your own dataset to understand the operational metrics. A sample notebook showing how to run the benchmarks against the MTEB datasets is hosted here. The key steps involved are:

Choose a representative set of data to embed and keywords to search.

Use the Amazon Titan Text Embeddings V2 model to embed your data and keywords, adjusting the chunk size and overlap as needed.

Carry out a similarity search using your preferred vector comparison method (such as Euclidean distance or cosine similarity).
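The similarity search step can be sketched in a few lines of plain Python. This is an illustrative in-memory version using cosine similarity, not the code from the sample notebook; the function names and the tiny two-dimensional vectors are made up for the example.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors of equal length."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same number of dimensions")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat a zero vector as matching nothing
    return dot / (norm_a * norm_b)


def top_k(
    query: Sequence[float], corpus: Dict[str, Sequence[float]], k: int = 3
) -> List[Tuple[str, float]]:
    """Return the k document IDs most similar to the query, best match first."""
    scored = [(doc_id, cosine_similarity(query, vector)) for doc_id, vector in corpus.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


# Toy vectors standing in for real embeddings.
corpus = {"doc-a": [1.0, 0.0], "doc-b": [0.0, 1.0], "doc-c": [1.0, 1.0]}
best_matches = top_k([1.0, 0.0], corpus, k=2)  # doc-a first, then doc-c
```

If the embeddings are requested as unit vectors, the dot product alone gives the same ranking as cosine similarity.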

Use Amazon Titan Text Embeddings V2 on Amazon Bedrock

The new Amazon Titan Text Embeddings V2 model is available through the fully managed, serverless experience on Amazon Bedrock. You can use the model through either the Amazon Bedrock REST API or the AWS SDK. The required parameters are the text that you want to embed and the modelId parameter, which identifies the Amazon Titan Text Embeddings model. Furthermore, you can now specify the output size of the vector, which is a significant feature of the V2 model.

Throughput has been a key requirement for running large ingestion workloads, and the Amazon Titan Text Embeddings model supports batching via Bedrock Batch to increase the throughput for your workloads. The following code is an example using the AWS SDK for Python (Boto3):
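A minimal sketch of a single embedding request with Boto3 might look like the following. It assumes the `bedrock-runtime` client's `invoke_model` operation, the model ID `amazon.titan-embed-text-v2:0`, and the request fields `inputText`, `dimensions`, and `normalize`; the helper names are hypothetical, and batch inference is not shown.

```python
import json
from typing import Any, List

MODEL_ID = "amazon.titan-embed-text-v2:0"  # assumed V2 model identifier
ALLOWED_DIMENSIONS = (256, 512, 1024)


def build_embedding_body(text: str, dimensions: int = 1024, normalize: bool = True) -> str:
    """Serialize the JSON request body for one piece of text."""
    if dimensions not in ALLOWED_DIMENSIONS:
        raise ValueError(f"dimensions must be one of {ALLOWED_DIMENSIONS}")
    return json.dumps({"inputText": text, "dimensions": dimensions, "normalize": normalize})


def embed_text(
    bedrock_runtime: Any, text: str, dimensions: int = 1024, normalize: bool = True
) -> List[float]:
    """Invoke the model and return the embedding vector from the response."""
    response = bedrock_runtime.invoke_model(
        modelId=MODEL_ID,
        body=build_embedding_body(text, dimensions, normalize),
        accept="application/json",
        contentType="application/json",
    )
    payload = json.loads(response["body"].read())
    return payload["embedding"]


# Usage (requires boto3, AWS credentials, and model access in your Region):
#   import boto3
#   client = boto3.client("bedrock-runtime", region_name="us-east-1")
#   vector = embed_text(client, "What is Amazon Bedrock?", dimensions=512)
```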

The full notebook is available in the GitHub repo.

With Amazon Titan Text Embeddings, you can input up to 8,192 tokens, allowing you to work with phrases or entire documents based on your use case. The model returns output vectors in a range of dimensions from 256–1024 with limited accuracy loss, while also optimizing for storage cost and low latency. Typically, models with larger context windows are tuned for accuracy at the expense of latency because they’re used in asynchronous workloads. However, even with its larger context window, Amazon Titan Text Embeddings achieves low latency, and with batching, it gives higher throughput for your workloads.

Run your own benchmarking

We always encourage our customers to perform their own benchmarking using their documents or the standard MTEB datasets and evaluation. For a sample of how to use the MTEB, see the GitHub repo. This notebook shows you how to load the dataset and set up evaluation for your specific use case (task) and run the benchmarking. If you run the benchmarking with your dataset, the typical steps involved are:

Use the Amazon Titan Text Embeddings V2 model to embed your data and keywords, adjusting the chunk size and overlap as needed.

Run similarity searches using your preferred distance metrics based on your choice of vector database.

A sample notebook showing how to use an in-memory database is available in the GitHub repo. This is a sample setup and should not be used for production workloads, where you would instead connect to a robust vector database offering like Amazon OpenSearch Serverless.

Migrate to Amazon Titan Text Embeddings V2

The cost and performance advantages provided by the V2 model are compelling reasons to consider reindexing your existing vector embeddings using V2. Let’s explore a few examples to illustrate the potential benefits, focusing solely on embedding costs.

Use case 1: High volume of searches

This first use case pertains to customers with a high volume of searches. The details are as follows:

Scenario:

1 million documents, 100 million chunks, 1,000 average tokens per chunk

100,000 searches per day, 1,000 token size for search

One-time cost:

Number of tokens: 100,000 million

Price per million tokens: $0.02

Reindexing cost: 100,000 * $0.02 = $2,000

Ongoing monthly savings (compared to V1):

Tokens embedded per month: 30 * 100,000 * 1,000 = 3,000 million

Savings per month (when migrating from V1 to V2): 3,000 * ($0.1 – $0.02) = $240

For this use case, the one-time reindexing cost of $2,000 will likely break even within 8–9 months through the ongoing monthly savings.

Use case 2: Ongoing indexing

This use case is for customers with ongoing indexing. The details are as follows:

Scenario:

500,000 documents, 50 million chunks, average 1,000 tokens per chunk

10,000 (2%) new documents added per month

1,000 searches per day, 1,000 token size for search

One-time cost:

Number of tokens: 50,000 million

Price per million tokens: $0.02

Reindexing cost: 50,000 * $0.02 = $1,000

Ongoing monthly savings (compared to V1):

Tokens embedded per month for storage: 10,000 documents * 100 chunks per document * 1,000 tokens per chunk = 1,000 million

Tokens embedded per month for search: 30 * 1,000 * 1,000 = 30 million

Savings per month (vs. V1): 1,030 * ($0.1 – $0.02) = $82.4

For this use case, the one-time reindexing cost of $1,000 is offset by estimated monthly savings of $82.4, so it breaks even after roughly 12 months.

These calculations do not account for the additional savings due to the reduced storage size (up to four times) with V2. This could translate into further cost savings in terms of your vector database storage requirements. The extent of these savings will vary depending on your specific data storage needs.
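To rerun these estimates with your own volumes, the arithmetic from both use cases can be written as a small sketch. The prices are the per-million-token figures quoted in this post; the function names are illustrative, and storage savings are left out, as in the examples above.

```python
V1_PRICE_PER_MILLION_TOKENS = 0.10  # USD, as quoted in this post
V2_PRICE_PER_MILLION_TOKENS = 0.02  # USD, as quoted in this post


def reindexing_cost(
    corpus_tokens_millions: float, price: float = V2_PRICE_PER_MILLION_TOKENS
) -> float:
    """One-time cost of re-embedding the whole corpus with V2."""
    return corpus_tokens_millions * price


def monthly_savings(
    monthly_tokens_millions: float,
    old_price: float = V1_PRICE_PER_MILLION_TOKENS,
    new_price: float = V2_PRICE_PER_MILLION_TOKENS,
) -> float:
    """Monthly embedding cost avoided by using V2 instead of V1."""
    return monthly_tokens_millions * (old_price - new_price)


def break_even_months(one_time_cost: float, savings_per_month: float) -> float:
    """Months of savings needed to recover the one-time reindexing cost."""
    if savings_per_month <= 0:
        raise ValueError("savings_per_month must be positive")
    return one_time_cost / savings_per_month


# Use case 1: 100,000 million corpus tokens, 3,000 million search tokens per month.
case1_cost = reindexing_cost(100_000)
case1_savings = monthly_savings(3_000)
case1_months = break_even_months(case1_cost, case1_savings)

# Use case 2: 50,000 million corpus tokens, 1,030 million tokens embedded per month.
case2_cost = reindexing_cost(50_000)
case2_savings = monthly_savings(1_030)
case2_months = break_even_months(case2_cost, case2_savings)
```

With the figures from this post, use case 1 comes out at about $2,000 one-time and $240 per month (break-even a little over 8 months), and use case 2 at about $1,000 one-time and $82.4 per month (break-even a little over 12 months).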

Conclusion

In this post, we introduced the new Amazon Titan Text Embeddings V2 model, with superior performance across various use cases like retrieval, reranking, and multilingual tasks. You can potentially realize substantial cost savings and performance improvements by reindexing your vector embeddings using the V2 model. The specific benefits will vary based on factors such as the volume of data, search traffic, and storage requirements, but the examples discussed in this post illustrate the potential value proposition. Amazon Titan Text Embeddings V2 is available today in the us-east-1 and us-west-2 AWS Regions.

About the authors

Shreyas Subramanian is a Principal AI/ML specialist Solutions Architect, and helps customers by using Machine Learning to solve their business challenges using the AWS platform. Shreyas has a background in large scale optimization and Machine Learning, and in use of Machine Learning and Reinforcement Learning for accelerating optimization tasks.

Rupinder Grewal is a Senior AI/ML Specialist Solutions Architect with AWS. He currently focuses on serving of models and MLOps on Amazon SageMaker. Prior to this role, he worked as a Machine Learning Engineer building and hosting models. Outside of work, he enjoys playing tennis and biking on mountain trails.

Pradeep Sridharan is a Senior Solutions Architect at AWS. He has years of experience in digital business transformation—designing and implementing solutions to drive market competitiveness and revenue growth across multiple sectors. He specializes in AI/ML, Data Analytics and Application Modernization and Migration. Pradeep is based in Arizona (US).

Anuradha Durfee is a Senior Product Manager at AWS working on generative AI. She has spent the last five years working on natural language understanding and is motivated by enabling life-like conversations between humans and technology. Anuradha is based in Boston, MA.

The Science of Perception & How we Hallucinate our Own Reality

Originally Published on Stefan Speaks

Continue reading on Becoming Human: Artificial Intelligence Magazine »

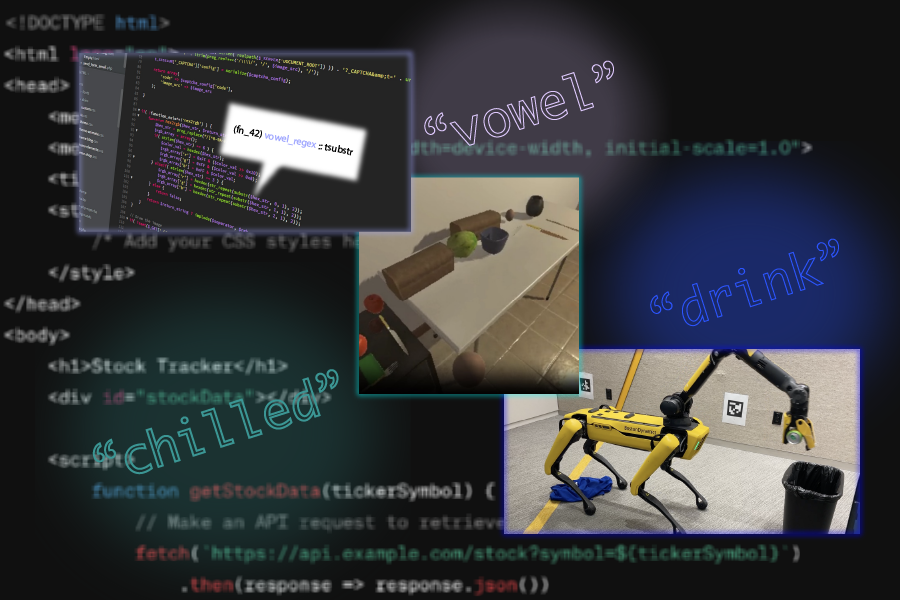

Natural language boosts LLM performance in coding, planning, and robotics

Large language models (LLMs) are becoming increasingly useful for programming and robotics tasks, but for more complicated reasoning problems, the gap between these systems and humans looms large. Without the ability to learn new concepts like humans do, these systems fail to form good abstractions — essentially, high-level representations of complex concepts that skip less-important details — and thus sputter when asked to do more sophisticated tasks.

Luckily, MIT Computer Science and Artificial Intelligence Laboratory (CSAIL) researchers have found a treasure trove of abstractions within natural language. In three papers to be presented at the International Conference on Learning Representations this month, the group shows how our everyday words are a rich source of context for language models, helping them build better overarching representations for code synthesis, AI planning, and robotic navigation and manipulation.

The three separate frameworks build libraries of abstractions for their given task: LILO (library induction from language observations) can synthesize, compress, and document code; Ada (action domain acquisition) explores sequential decision-making for artificial intelligence agents; and LGA (language-guided abstraction) helps robots better understand their environments to develop more feasible plans. Each system is a neurosymbolic method, a type of AI that blends human-like neural networks and program-like logical components.

LILO: A neurosymbolic framework that codes

Large language models can be used to quickly write solutions to small-scale coding tasks, but cannot yet architect entire software libraries like the ones written by human software engineers. To take their software development capabilities further, AI models need to refactor (cut down and combine) code into libraries of succinct, readable, and reusable programs.

Refactoring tools like the previously developed MIT-led Stitch algorithm can automatically identify abstractions, so, in a nod to the Disney movie “Lilo & Stitch,” CSAIL researchers combined these algorithmic refactoring approaches with LLMs. Their neurosymbolic method LILO uses a standard LLM to write code, then pairs it with Stitch to find abstractions that are comprehensively documented in a library.

LILO’s unique emphasis on natural language allows the system to do tasks that require human-like commonsense knowledge, such as identifying and removing all vowels from a string of code and drawing a snowflake. In both cases, the CSAIL system outperformed standalone LLMs, as well as a previous library learning algorithm from MIT called DreamCoder, indicating its ability to build a deeper understanding of the words within prompts. These encouraging results point to how LILO could assist with things like writing programs to manipulate documents like Excel spreadsheets, helping AI answer questions about visuals, and drawing 2D graphics.

“Language models prefer to work with functions that are named in natural language,” says Gabe Grand SM ’23, an MIT PhD student in electrical engineering and computer science, CSAIL affiliate, and lead author on the research. “Our work creates more straightforward abstractions for language models and assigns natural language names and documentation to each one, leading to more interpretable code for programmers and improved system performance.”

When prompted on a programming task, LILO first uses an LLM to quickly propose solutions based on data it was trained on, and then the system slowly searches more exhaustively for outside solutions. Next, Stitch efficiently identifies common structures within the code and pulls out useful abstractions. These are then automatically named and documented by LILO, resulting in simplified programs that can be used by the system to solve more complex tasks.

The MIT framework writes programs in domain-specific programming languages, like Logo, a language developed at MIT in the 1970s to teach children about programming. Scaling up automated refactoring algorithms to handle more general programming languages like Python will be a focus for future research. Still, their work represents a step forward for how language models can facilitate increasingly elaborate coding activities.

Ada: Natural language guides AI task planning

Just like in programming, AI models that automate multi-step tasks in households and command-based video games lack abstractions. Imagine you’re cooking breakfast and ask your roommate to bring a hot egg to the table — they’ll intuitively abstract their background knowledge about cooking in your kitchen into a sequence of actions. In contrast, an LLM trained on similar information will still struggle to reason about what they need to build a flexible plan.

Named after the famed mathematician Ada Lovelace, who many consider the world’s first programmer, the CSAIL-led “Ada” framework makes headway on this issue by developing libraries of useful plans for virtual kitchen chores and gaming. The method trains on potential tasks and their natural language descriptions, then a language model proposes action abstractions from this dataset. A human operator scores and filters the best plans into a library, so that the best possible actions can be implemented into hierarchical plans for different tasks.

“Traditionally, large language models have struggled with more complex tasks because of problems like reasoning about abstractions,” says Ada lead researcher Lio Wong, an MIT graduate student in brain and cognitive sciences, CSAIL affiliate, and LILO coauthor. “But we can combine the tools that software engineers and roboticists use with LLMs to solve hard problems, such as decision-making in virtual environments.”

When the researchers incorporated the widely-used large language model GPT-4 into Ada, the system completed more tasks in a kitchen simulator and Mini Minecraft than the AI decision-making baseline “Code as Policies.” Ada used the background information hidden within natural language to understand how to place chilled wine in a cabinet and craft a bed. The results indicated a staggering 59 and 89 percent task accuracy improvement, respectively.

With this success, the researchers hope to generalize their work to real-world homes, with the hopes that Ada could assist with other household tasks and aid multiple robots in a kitchen. For now, its key limitation is that it uses a generic LLM, so the CSAIL team wants to apply a more powerful, fine-tuned language model that could assist with more extensive planning. Wong and her colleagues are also considering combining Ada with a robotic manipulation framework fresh out of CSAIL: LGA (language-guided abstraction).

Language-guided abstraction: Representations for robotic tasks

Andi Peng SM ’23, an MIT graduate student in electrical engineering and computer science and CSAIL affiliate, and her coauthors designed a method to help machines interpret their surroundings more like humans, cutting out unnecessary details in a complex environment like a factory or kitchen. Just like LILO and Ada, LGA has a novel focus on how natural language leads us to those better abstractions.

In these more unstructured environments, a robot will need some common sense about what it’s tasked with, even with basic training beforehand. Ask a robot to hand you a bowl, for instance, and the machine will need a general understanding of which features are important within its surroundings. From there, it can reason about how to give you the item you want.

In LGA’s case, humans first provide a pre-trained language model with a general task description using natural language, like “bring me my hat.” Then, the model translates this information into abstractions about the essential elements needed to perform this task. Finally, an imitation policy trained on a few demonstrations can implement these abstractions to guide a robot to grab the desired item.

Previous work required a person to take extensive notes on different manipulation tasks to pre-train a robot, which can be expensive. Remarkably, LGA guides language models to produce abstractions similar to those of a human annotator, but in less time. To illustrate this, LGA developed robotic policies to help Boston Dynamics’ Spot quadruped pick up fruits and throw drinks in a recycling bin. These experiments show how the MIT-developed method can scan the world and develop effective plans in unstructured environments, potentially guiding autonomous vehicles on the road and robots working in factories and kitchens.

“In robotics, a truth we often disregard is how much we need to refine our data to make a robot useful in the real world,” says Peng. “Beyond simply memorizing what’s in an image for training robots to perform tasks, we wanted to leverage computer vision and captioning models in conjunction with language. By producing text captions from what a robot sees, we show that language models can essentially build important world knowledge for a robot.”

The challenge for LGA is that some behaviors can’t be explained in language, making certain tasks underspecified. To expand how they represent features in an environment, Peng and her colleagues are considering incorporating multimodal visualization interfaces into their work. In the meantime, LGA provides a way for robots to gain a better feel for their surroundings when giving humans a helping hand.

An “exciting frontier” in AI

“Library learning represents one of the most exciting frontiers in artificial intelligence, offering a path towards discovering and reasoning over compositional abstractions,” says assistant professor at the University of Wisconsin-Madison Robert Hawkins, who was not involved with the papers. Hawkins notes that previous techniques exploring this subject have been “too computationally expensive to use at scale” and have an issue with the lambdas, or keywords used to describe new functions in many languages, that they generate. “They tend to produce opaque ‘lambda salads,’ big piles of hard-to-interpret functions. These recent papers demonstrate a compelling way forward by placing large language models in an interactive loop with symbolic search, compression, and planning algorithms. This work enables the rapid acquisition of more interpretable and adaptive libraries for the task at hand.”

By building libraries of high-quality code abstractions using natural language, the three neurosymbolic methods make it easier for language models to tackle more elaborate problems and environments in the future. This deeper understanding of the precise keywords within a prompt presents a path forward in developing more human-like AI models.

MIT CSAIL members are senior authors for each paper: Joshua Tenenbaum, a professor of brain and cognitive sciences, for both LILO and Ada; Julie Shah, head of the Department of Aeronautics and Astronautics, for LGA; and Jacob Andreas, associate professor of electrical engineering and computer science, for all three. The additional MIT authors are all PhD students: Maddy Bowers and Theo X. Olausson for LILO, Jiayuan Mao and Pratyusha Sharma for Ada, and Belinda Z. Li for LGA. Muxin Liu of Harvey Mudd College was a coauthor on LILO; Zachary Siegel of Princeton University, Jaihai Feng of the University of California at Berkeley, and Noa Korneev of Microsoft were coauthors on Ada; and Ilia Sucholutsky, Theodore R. Sumers, and Thomas L. Griffiths of Princeton were coauthors on LGA.

LILO and Ada were supported, in part, by MIT Quest for Intelligence, the MIT-IBM Watson AI Lab, Intel, U.S. Air Force Office of Scientific Research, the U.S. Defense Advanced Research Projects Agency, and the U.S. Office of Naval Research, with the latter project also receiving funding from the Center for Brains, Minds and Machines. LGA received funding from the U.S. National Science Foundation, Open Philanthropy, the Natural Sciences and Engineering Research Council of Canada, and the U.S. Department of Defense.

Simple guide to training Llama 2 with AWS Trainium on Amazon SageMaker

Large language models (LLMs) are making a significant impact in the realm of artificial intelligence (AI). Their impressive generative abilities have led to widespread adoption across various sectors and use cases, including content generation, sentiment analysis, chatbot development, and virtual assistant technology. Llama 2 by Meta is an example of an LLM available on AWS. Llama 2 is an auto-regressive language model that uses an optimized transformer architecture and is intended for commercial and research use in English. It comes in a range of parameter sizes (7 billion, 13 billion, and 70 billion) as well as pre-trained and fine-tuned variations. To learn more about Llama 2 on AWS, refer to Llama 2 foundation models from Meta are now available in Amazon SageMaker JumpStart.

Many practitioners fine-tune or pre-train these Llama 2 models with their own text data to improve accuracy for their specific use case. However, in some cases, a challenge arises for practitioners: the high cost of fine-tuning and training. As organizations strive to push the boundaries of what LLMs can achieve, the demand for cost-effective training solutions has never been more pressing. In this post, we explore how you can use the Neuron distributed training library to fine-tune, continuously pre-train, and reduce the cost of training LLMs such as Llama 2 with AWS Trainium instances on Amazon SageMaker.

AWS Trainium instances for training workloads

SageMaker ml.trn1 and ml.trn1n instances, powered by Trainium accelerators, are purpose-built for high-performance deep learning training and offer up to 50% cost-to-train savings over comparable training-optimized Amazon Elastic Compute Cloud (Amazon EC2) instances. This post implements a solution with the ml.trn1.32xlarge Trainium instance type, typically used for training large-scale models. However, there are also comparable ml.trn1n instances that offer twice as much networking throughput (1,600 Gbps) via Amazon Elastic Fabric Adapter (EFAv2). SageMaker Training supports ml.trn1 and ml.trn1n instances in the US East (N. Virginia) and US West (Oregon) AWS Regions, and most recently announced general availability in the US East (Ohio) Region. These instances are available in the listed Regions as On-Demand, Reserved, and Spot Instances, or additionally as part of a Savings Plan.

For more information on Trainium Accelerator chips, refer to Achieve high performance with lowest cost for generative AI inference using AWS Inferentia2 and AWS Trainium on Amazon SageMaker. Additionally, check out AWS Trainium Customers to learn more about customer testimonials, or see Amazon EC2 Trn1 Instances for High-Performance Model Training are Now Available to dive into the accelerator highlights and specifications.

Using the Neuron Distributed library with SageMaker

SageMaker is a fully managed service that provides developers, data scientists, and practitioners the ability to build, train, and deploy machine learning (ML) models at scale. SageMaker Training includes features that improve and simplify the ML training experience, including managed infrastructure and images for deep learning, automatic model tuning with hyperparameter optimization, and a pay-for-what-you-use billing structure. This section highlights the advantages of using SageMaker for distributed training with the Neuron Distributed library: specifically, the managed infrastructure, time-to-train, and cost-to-train benefits of its associated resiliency and recovery features. The Neuron Distributed library is part of the AWS Neuron SDK, which is used to run deep learning workloads on AWS Inferentia and AWS Trainium based instances.

In high performance computing (HPC) clusters, such as those used for deep learning model training, hardware resiliency issues can be a potential obstacle. Although hardware failures while training on a single instance may be rare, issues resulting in stalled training become more prevalent as a cluster grows to tens or hundreds of instances. Regular checkpointing helps mitigate wasted compute time, but engineering teams managing their own infrastructure must still closely monitor their workloads and be prepared to remediate a failure at all hours to minimize training downtime. The managed infrastructure of SageMaker Training includes several resiliency features that make this monitoring and recovery process streamlined:

Cluster health checks – Before a training job starts, SageMaker runs health checks and verifies communication on the provisioned instances. It then replaces any faulty instances, if necessary, to make sure the training script starts running on a healthy cluster of instances. Health checks are currently enabled for the TRN1 instance family as well as P* and G* GPU-based instance types.

Automatic checkpointing – Checkpoints from a local path (/opt/ml/checkpoints by default) are automatically copied to an Amazon Simple Storage Service (Amazon S3) location specified by the user. When training is restarted, SageMaker automatically copies the previously saved checkpoints from the S3 location back to the local checkpoint directory to make sure the training script can load and resume the last saved checkpoint.

Monitoring and tracking training – In the case of a node failure, it’s important to have visibility into where the failure occurs. PyTorch Neuron lets data scientists track training progress in TensorBoard. You can plot the training loss to see when the model has converged and decide when the training job should be stopped.

Built-in retries and cluster repair – You can configure SageMaker to automatically retry training jobs that fail with a SageMaker internal server error (ISE). As part of retrying a job, SageMaker replaces any instances that encountered unrecoverable errors with fresh instances, reboots all healthy instances, and starts the job again. This results in faster restarts and workload completion. Cluster update is currently enabled for the TRN1 instance family as well as P and G GPU-based instance types. Practitioners can add their own applicative retry mechanism around the client code that submits the job, to handle other types of launch errors, such as exceeding your account quota.
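The client-side retry mechanism mentioned in the last item can be sketched as a small wrapper with exponential backoff. This is a minimal illustration, not code from the post: the function name submit_with_retries and its parameters are hypothetical, and the callable you pass in would be whatever code submits your job.

```python
import random
import time


def submit_with_retries(submit_job, max_attempts=4, base_delay=1.0,
                        retryable=(Exception,), sleep=time.sleep):
    """Call submit_job() until it succeeds or max_attempts is reached.

    Between tries, waits base_delay * 2**attempt seconds plus up to 10% jitter.
    All names here are illustrative, not part of any SageMaker API.
    """
    for attempt in range(max_attempts):
        try:
            return submit_job()
        except retryable:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error to the caller
            delay = base_delay * (2 ** attempt)
            sleep(delay + random.uniform(0, 0.1 * delay))
```

In practice you would likely narrow retryable to the specific launch errors you expect (for example, a quota-related client error) rather than retrying on every exception.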

For customers working with large clusters of hundreds of instances for a training job, the resiliency and recovery features of SageMaker Training can reduce total time for a model to converge by up to 20% via fewer failures and faster recovery. This also enables engineering teams to monitor and react to failures at all hours. Although SageMaker training jobs are suitable for general-purpose training use cases with customizable configurations and integration with the broader AWS ecosystem, Amazon SageMaker HyperPod is specifically optimized for efficient and resilient training of foundation models at scale. For more information on SageMaker HyperPod use cases, refer to the SageMaker HyperPod developer guide.

In this post, we use the Neuron Distributed library to continuously pre-train a Llama 2 model with tensor and pipeline parallelism on SageMaker training jobs. To learn more about the resiliency and recovery features of SageMaker Training, refer to Training large language models on Amazon SageMaker: Best practices.

Solution overview

In this solution, we use an ml.t3.medium instance type on a SageMaker Jupyter notebook to process the provided cells. We continuously pre-train our llama2-70b model using the trn1.32xlarge Trainium instance. First, let’s familiarize ourselves with the techniques that the Neuron distributed training library uses to distribute the training job created in our solution.

The techniques used to convert the pre-trained weights in the convert_pretrained_weights.ipynb notebook into a .pt (PyTorch) weights file are called pipeline parallelism and tensor parallelism:

Pipeline parallelism is a training strategy that splits a deep neural network into sequential stages (groups of layers) and each batch into multiple microbatches, so that each stage worker processes one microbatch at a time.

Tensor parallelism splits the tensors of a neural network across multiple devices. This technique makes it possible to train models with large tensors that can’t fit into the memory of a single device.
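To make the tensor parallelism idea concrete, here is a minimal pure-Python sketch, under the assumption that a weight matrix is split column-wise. It is an illustration of the general technique, not the Neuron Distributed implementation, and all function names are hypothetical. Each "device" holds one column shard of the weights, computes its slice of the output, and the slices are concatenated.

```python
def matmul(x, w):
    """Multiply matrix x (n x k) by matrix w (k x m); both are lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*w)] for row in x]


def split_columns(w, num_devices):
    """Split w column-wise into num_devices equal shards, one per device."""
    cols = len(w[0])
    assert cols % num_devices == 0, "columns must divide evenly across devices"
    width = cols // num_devices
    return [[row[d * width:(d + 1) * width] for row in w] for d in range(num_devices)]


def tensor_parallel_matmul(x, w, num_devices):
    """Each device multiplies x by its own shard; the outputs are concatenated."""
    partials = [matmul(x, shard) for shard in split_columns(w, num_devices)]
    return [sum((p[i] for p in partials), []) for i in range(len(x))]


x = [[1, 2], [3, 4]]
w = [[1, 0, 2, 1], [0, 1, 1, 3]]
# The sharded result matches the single-device result.
assert tensor_parallel_matmul(x, w, num_devices=2) == matmul(x, w)
```

No single device ever stores the full weight matrix, which is the property that lets large layers fit in per-device memory.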

After we convert our pre-trained weights with the preceding techniques in our first notebook, we follow two more notebooks in the same sagemaker-trainium-examples folder. The second notebook, Training_llama2_70b.ipynb, walks through the continuous pre-training process, starting from the checkpoint of converted model weights saved in the first notebook and prepping the result for inference. When this step is complete, we can run the Convert_Nxd_to_hf.ipynb notebook, which takes the weights trained with the NeuronX library and converts them into Hugging Face format to serve inference.

Prerequisites

You need to complete some prerequisites before you can run the first notebook.

First, make sure you have created a Hugging Face access token so you can download the Hugging Face tokenizer to be used later. After you have the access token, you need to make a few quota increase requests for SageMaker. Request between 8 and 32 Trn1 instances, depending on the time-to-train and cost-to-train trade-offs for your use case.

On the Service Quotas console, request the following SageMaker quotas:

Trainium instances (ml.trn1.32xlarge) for training job usage: 8–32

ml.trn1.32xlarge for training warm pool usage: 8–32

Maximum number of instances per training job: 8–32

It may take up to 24 hours for the quota increase to get approved. However, after submitting the quota increase, you can go to the sagemaker-trainium-examples GitHub repo and locate the convert_pretrained_weights.ipynb file. This is the file that you use to begin the continual pre-training process.

Now that you’re ready to begin the process to continuously pre-train the llama2-70b model, you can convert the pre-trained weights in the next section to prep the model and create the checkpoint.

Getting started

Complete the following steps:

Install all the required packages and libraries: SageMaker, Boto3, transformers, and datasets.

These packages make sure that you can set up your environment to access your pre-trained Llama 2 model, download your tokenizer, and get your pre-training dataset.

After the packages are installed, retrieve your Hugging Face access token, and download and define your tokenizer.

The tokenizer meta-llama/Llama-2-70b-hf is a specialized tokenizer that breaks down text into smaller units for natural language processing. This tokenized data will later be uploaded into Amazon S3 to allow for running your training job.

After running the preceding cells, download the wikicorpus dataset from Hugging Face datasets.

Tokenize the dataset with the llama-2 tokenizer that you just initialized.

By tokenizing the data, you’re preparing to pre-train your Llama 2 model on the trilingual (Catalan, English, Spanish) text in the wikicorpus dataset, exposing it to the intricate patterns and relationships in that data to enhance its performance.
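A common follow-up step when preparing tokenized text for causal language model pre-training is to pack the token IDs into fixed-length sequences. The source does not show whether the notebook does this, so treat the following as a hedged sketch of that general technique; the function name and the tiny made-up token IDs are purely illustrative.

```python
def group_into_blocks(tokenized_docs, block_size):
    """Concatenate token ID lists and cut them into fixed-length blocks.

    A trailing remainder shorter than block_size is dropped.
    """
    flat = [tok for doc in tokenized_docs for tok in doc]
    usable = (len(flat) // block_size) * block_size
    return [flat[i:i + block_size] for i in range(0, usable, block_size)]


docs = [[1, 5, 9], [1, 7], [1, 3, 3, 8, 2]]  # made-up token IDs for three documents
blocks = group_into_blocks(docs, block_size=4)
print(blocks)  # [[1, 5, 9, 1], [7, 1, 3, 3]]
```

With a real tokenizer, block_size would be the model's training sequence length rather than 4.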

After the data is tokenized, run the following cell to store the training dataset in Amazon S3:

The cell above makes sure that you define the training_input_path and have uploaded the data to your S3 bucket. You’re now ready to begin the training job process.

Run the training job

For the training job, we use trn1.32xlarge instances, each of which has 32 Neuron cores. We use tensor parallelism and pipeline parallelism, which allow you to shard the model across Neuron cores for training.
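The arithmetic behind sharding a model across Neuron cores can be sketched as follows. The 32 instances and 32 cores per instance come from this post; the tensor parallel and pipeline parallel degrees of 8 are hypothetical values chosen for illustration, and the function name is an assumption.

```python
def parallel_layout(num_instances, cores_per_instance, tp_degree, pp_degree):
    """Derive the data-parallel degree from the cluster size and model sharding."""
    world_size = num_instances * cores_per_instance
    cores_per_replica = tp_degree * pp_degree  # cores holding one model copy
    if world_size % cores_per_replica != 0:
        raise ValueError("tp_degree * pp_degree must divide the total core count")
    return {
        "world_size": world_size,
        "cores_per_replica": cores_per_replica,
        "dp_degree": world_size // cores_per_replica,
    }


# tp_degree=8 and pp_degree=8 are illustrative, not values from the post.
layout = parallel_layout(num_instances=32, cores_per_instance=32,
                         tp_degree=8, pp_degree=8)
print(layout)  # {'world_size': 1024, 'cores_per_replica': 64, 'dp_degree': 16}
```

Under these assumed degrees, each model replica spans 64 cores (two instances), and 16 replicas train in parallel on different data.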

The following code is the configuration for pretraining llama2-70b with trn1:

Now you can define the hyperparameters for training. Note that adjusting these parameters based on hardware capabilities, dataset characteristics, and convergence requirements can significantly impact training performance and efficiency.
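As one example of how hardware and hyperparameters interact, the effective global batch size depends on the per-replica micro-batch size, the number of gradient accumulation steps, and the number of data-parallel replicas. The sketch below shows this standard relationship; the function names and the specific values are hypothetical and not taken from the notebook.

```python
def global_batch_size(micro_batch_size, grad_accum_steps, dp_degree):
    """Sequences consumed per optimizer step across all data-parallel replicas."""
    return micro_batch_size * grad_accum_steps * dp_degree


def tokens_per_step(global_batch, seq_length):
    """Tokens consumed per optimizer step."""
    return global_batch * seq_length


# Illustrative values only: 16 data-parallel replicas, sequence length 4096.
batch = global_batch_size(micro_batch_size=1, grad_accum_steps=64, dp_degree=16)
print(batch)                         # 1024
print(tokens_per_step(batch, 4096))  # 4194304
```

If you shrink the cluster, the data-parallel degree drops, so you would need more accumulation steps to keep the same global batch size.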

The following is the code for the hyperparameters:

Now you specify the Docker image that will be used to train the model on Trainium:

The image we defined is designed for PyTorch training with Neuron optimizations. This image is configured to work with PyTorch, using Neuron SDK version 2.18.0 for enhanced performance and efficiency on Trn1 instances equipped with AWS Trainium chips. This image is also compatible with Python 3.10, indicated by the py310, and is based on Ubuntu 20.04.

Prior to starting your training job, you need to configure it by defining all necessary variables. You do so by defining the training job name, checkpoint directory, and cache directory:

The parameters enable you to do the following:

The training job name allows you to identify and track individual training jobs based on timestamps

The checkpoint directory specifies the S3 URI where the checkpoint data, weights, and other information are stored for the trained model

The cache directory helps optimize the training process by storing and reusing previously calculated values, from the checkpoint directory, reducing redundancy and improving efficiency

The environment variables make sure that the training job is optimally configured and settings are tailored to enable efficient and effective training using features like RDMA, optimized memory allocation, fused operations, and Neuron-specific device optimizations
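A minimal sketch of how the job name and directories described above might be derived. The prefix, bucket name, and path layout are all placeholders invented for illustration; in particular, keeping the cache directory outside the per-job path (so later jobs can reuse it) is an assumption, not something the post specifies.

```python
import time


def training_job_config(prefix, bucket, now=None):
    """Build a timestamped job name plus checkpoint and cache locations.

    `now` is seconds since the epoch; None means the current time.
    """
    stamp = time.strftime("%Y-%m-%d-%H-%M-%S", time.gmtime(now))
    job_name = f"{prefix}-{stamp}"
    if len(job_name) > 63:
        raise ValueError("SageMaker training job names are limited to 63 characters")
    return {
        "job_name": job_name,
        # Per-job location for checkpoint data and weights.
        "checkpoint_s3_uri": f"s3://{bucket}/{job_name}/checkpoints",
        # Assumed to be shared across jobs so cached values can be reused.
        "cache_dir": f"s3://{bucket}/{prefix}/cache",
    }


config = training_job_config("llama2-70b-pretrain", "example-bucket")
print(config["job_name"])
```

Because the timestamp is part of the name, rerunning the cell yields a new, unique job name each time.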

After you have defined your training job and configured all directories and environment variables for an optimal training pipeline, you now set up your PyTorch estimator to begin the training job on SageMaker. A SageMaker estimator is a high-level interface that handles the end-to-end SageMaker training and deployment tasks.

The entry_point is specified as the Python script run_llama_nxd.py. We use the instance_type ml.trn1.32xlarge, the instance count is 32 (which was previously defined as a global variable in the configuration code), and input_mode is set to FastFile. Fast File mode in SageMaker streams data from Amazon S3 on demand, which optimizes data loading performance by fetching data as needed, reducing overall resource consumption. For more information on input, refer to Access Training Data.
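The estimator settings described above could be assembled roughly as follows, using the SageMaker Python SDK's PyTorch estimator. This is a hedged sketch rather than the notebook's actual cell: the helper names, the "train" channel name, and the torch_distributed launcher setting are assumptions, while the entry point, instance type, instance count, input mode, and local checkpoint path come from this post.

```python
def build_estimator_kwargs(role, image_uri, hyperparameters,
                           checkpoint_s3_uri, instance_count=32):
    """Collect the PyTorch estimator arguments described in the text."""
    return {
        "entry_point": "run_llama_nxd.py",
        "role": role,
        "image_uri": image_uri,
        "instance_type": "ml.trn1.32xlarge",
        "instance_count": instance_count,
        "hyperparameters": hyperparameters,
        # Checkpoints written locally are synced to this S3 location.
        "checkpoint_s3_uri": checkpoint_s3_uri,
        "checkpoint_local_path": "/opt/ml/checkpoints",
        # Stream training data from Amazon S3 on demand.
        "input_mode": "FastFile",
        # Assumption: launch the script with torchrun across all instances.
        "distribution": {"torch_distributed": {"enabled": True}},
    }


def launch(kwargs, training_input_path, job_name):
    """Create the estimator and start the job without blocking."""
    # Imported lazily so the helper above works without the SDK installed.
    from sagemaker.pytorch import PyTorch

    estimator = PyTorch(**kwargs)
    # "train" is an assumed channel name.
    estimator.fit({"train": training_input_path}, job_name=job_name, wait=False)
    return estimator
```

Separating the argument dictionary from the launch call makes it easy to inspect or log the configuration before any AWS resources are created.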

Finally, you can start the training job with the SageMaker fit() method, which trains the model based on the defined hyperparameters:

You have successfully started the process to continuously pre-train a llama2-70b model by converting pre-trained weights with tokenized data using SageMaker training on Trainium instances.

Continuous pre-training

After following the prerequisites, completing the provided notebook, and converting the pre-trained weights as a checkpoint, you can now begin the continual pre-training process, using the checkpoint as a point of reference to pre-train the llama2-70b model.

To begin the continuous pre-training process, follow the Training_llama2_70b.ipynb file in the sagemaker-trainium-examples repo.

Given the large size of the llama2-70b model, you need to convert the pre-trained weights into a more efficient and useable format (.pt). You can do so by defining the hyperparameters in your configuration to store converted weights and checkpoints. The following are the hyperparameters:

If you look at the hyperparameters, the output_dir is used as a reference for pre-training. If you are at this cell, you should have already followed the Training_llama2_70b.ipynb notebook and gone through the process of setting up your SageMaker client and Docker image, and preparing the pre-trained weights for pre-training. You’re now ready to perform the continuous pre-training process on the llama2-70b model.

We use the following parameters to take the pre-trained weights stored in output_dir in the convert_pretrained_weights.ipynb file to be reused continuously for pre-training:

After these hyperparameters are implemented, you can run the rest of the notebook cells to complete the continuous pre-training process. After the SageMaker estimator has completed the training job, you can locate the new checkpoint in the S3 checkpoint directory containing the weights. You can now locate the convert_Nxd_to_hf.ipynb file to get the checkpoint ready for inferencing.

Convert the Neuron Distributed checkpoint for inferencing

Checkpoints play a vital role in the context of distributed training with the NeuronX library because it has checkpoint compatibility with Hugging Face Transformers. You can get the training job output ready for inferencing by taking the NeuronX distributed checkpoint saved by the training job and converting the weights into .pt weights files.

To convert the checkpoints to Hugging Face format using NeuronX, you first need to save the S3 nxd_checkpoint_path directory:

After you save the checkpoint in the nxd_checkpoint_path directory, you can save your hyperparameters and configure your SageMaker estimator, which makes sure the pre-training process can begin. You can now run the fit() function within the estimator to convert the pre-trained weights into a checkpoint for inferencing with the following cell:

Summary